AI is moving fast.

Maybe a little too fast.

One of the newest trends is AI-powered browsers.

What Exactly Is An AI-Power Browser?

While a traditional browser simply loads pages, an AI browser, on the other hand, helps you think.

An AI-powered browser isn’t your regular browser.

It’s like having a built-in assistant that can summarize pages, answer your questions in plain English, and even suggest content tailored to you.

Instead of just dumping a list of links, it actually works smarter because it gives you direct answers, automates repetitive tasks, and understands what you’re really looking for.

The goal?

To make browsing faster, easier, and way more personal.

An AI browser combines a regular web browser with built-in AI models, like GPT-4.1, that process what you’re doing in real time.

And while they sound convenient, there’s a serious risk we need to talk about: something called “prompt injection.”

So, what does that mean?

Let’s break it down.

Large language models (LLMs), the tech behind chatbots like ChatGPT, Claude, and Gemini, respond to prompts.

That’s just a fancy word for the questions and instructions you give them.

Simple enough.

But here’s the catch: these AIs can’t always tell the difference between a rule they’re supposed to follow (like “don’t write ransomware”) and a request coming from you, the user.

And that’s where things get messy.

Recently, Brave (the browser company that built its own AI assistant, Leo) decided to test this.

They wanted to see if an AI browser could be tricked into following hidden, malicious prompts.

The results?

Not great.

As Brave put it:

“As users grow comfortable with AI browsers and begin trusting them with sensitive data in logged in sessions – such as banking, healthcare, and other critical websites – the risks multiply. What if the model hallucinates and performs actions you didn’t request? Or worse, what if a benign-looking website or a comment left on a social media site could steal your login credentials or other sensitive data by adding invisible instructions for the AI assistant?”

Yep, that’s the nightmare scenario.

So What Is Prompt Injection?

Think of it as hacking with words instead of code.

Instead of breaking into servers or finding software bugs, attackers simply tell the AI what to do using hidden or sneaky instructions.

In AI browsers, part of the input comes from the websites you visit.

That means malicious instructions could be buried inside a page, invisible to you but crystal clear to the AI.

Imagine white text on a white background; easy for AI to read, invisible to your eyes.

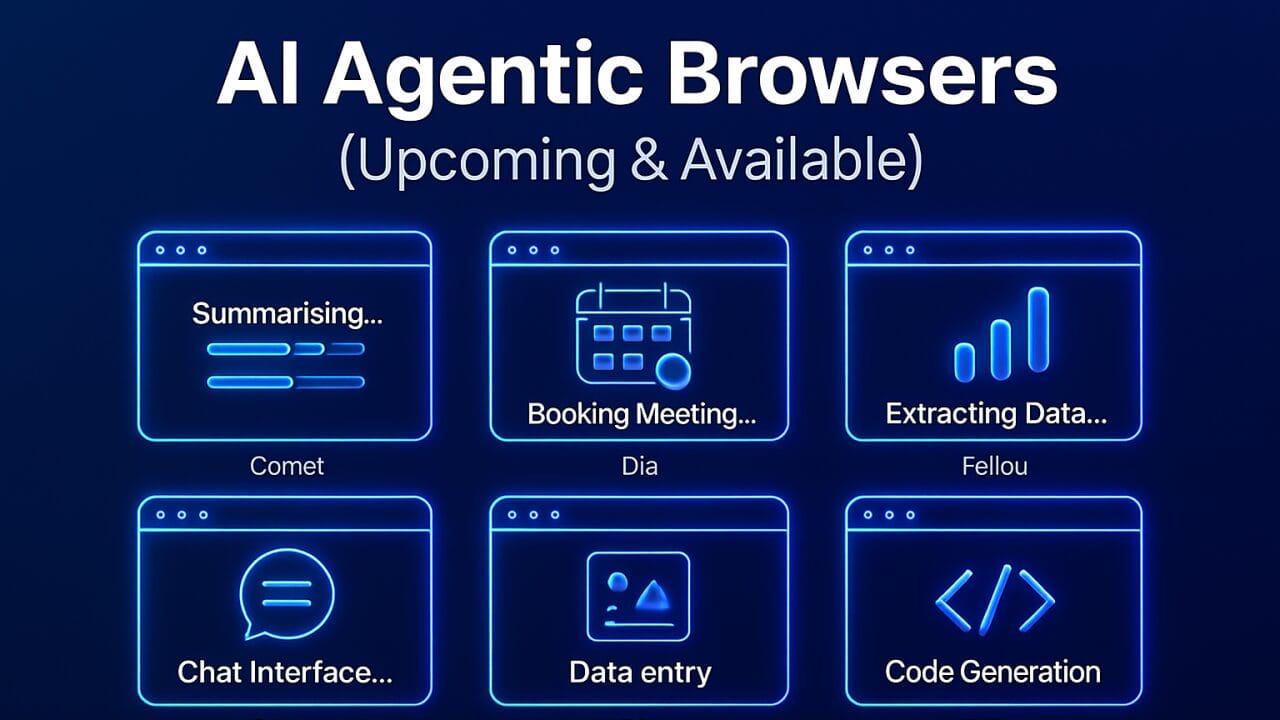

AI Browsers Vs. Agentic Browsers

Now here’s where it gets more concerning.

An AI browser is basically a helper.

It can answer questions, summarize pages, or recommend content.

But you’re still the one clicking, approving, and guiding.

An agentic browser, on the other hand, takes things a big step further.

This isn’t just a browser; it’s an assistant that acts for you.

It can fill out forms, make purchases, book appointments, even handle full workflows.

Picture this: You say, “Find the cheapest flight to Paris next month and book it.”

An agentic browser can do all of that – research, comparison, booking, payment – without you lifting a finger.

Convenient?

Absolutely.

Risky?

Even more so.

Because if a malicious site feeds your browser hidden instructions, suddenly it’s not just browsing.

It could be spending your money or leaking sensitive info without you realizing it.

Or, as one person on X put it:

“You can literally get prompt injected and your bank account drained by doomscrolling on reddit”

Scary thought, right?

Brave’s Research

Brave found that Perplexity’s Comet (another AI browser) still has weaknesses in this area.

Even after fixes were attempted, it’s still vulnerable to indirect prompt injections.

And remember, these attacks don’t require advanced hacking skills.

Just clever wording.

So How Do We Protect Ourselves?

Like with anything cutting-edge, agentic browsers come with serious risks.

If you’re tempted to try them, keep this checklist in mind:

- 🔑 Be picky with permissions – only give access to sensitive data when absolutely necessary.

- 🔍 Double-check sources – don’t let your browser auto-interact with shady sites or links.

- ⬆️ Keep everything updated – security patches matter.

- 🔒 Use strong authentication – think multi-factor authentication and activity monitoring.

- 🧠 Stay informed – understanding prompt injection is your first defense.

- 🛑 Don’t fully automate high-stakes stuff – especially payments. Always require a manual review.

- 🚨 Report weird behavior – if your AI browser starts asking strange questions or making odd moves, flag it immediately.

The bottom line: AI browsers—especially agentic ones—are powerful, but they’re also a brand-new attack vector for hackers.

And the weapon of choice?

Not code… just words.

Let me know your thoughts on this in the comments below.

All the best,

Gary Nugent

Check out my Instagram posts and reels here:

Follow me (@aiaffiliatesecrets) on Instagram

P.S.: Don't forget, if you want to create an internet income of your own, here's one of my recommended ways to do that: